The latency between pressing a button and seeing it on the screen is amazing.

    reconcile(prev_screen, next_screen) do |row, col, text|
      oled_command "cursor", "#{row},#{col}", "write", text
    end
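The post does not show what `reconcile` does internally, so here is only a rough sketch of how such a helper might work. It assumes a screen is an array of fixed-width rows and that the OLED shows 8 rows of 21 characters in text mode. `ROWS`, `COLS` and `layout` are hypothetical names made up for this illustration, and the wrapping is deliberately naive (no word wrapping).

```ruby
# Assumed text geometry of the OLED; adjust to the real display.
ROWS = 8
COLS = 21

# Hypothetical helper: turn the text into exactly ROWS rows of COLS characters.
# Lines are cut at COLS characters and only the last ROWS lines are kept.
def layout(text)
  lines = text.scan(/.{1,#{COLS}}/).last(ROWS)
  lines += [""] * (ROWS - lines.size)
  lines.map { |line| line.ljust(COLS) }
end

# Compare two screens produced by layout and yield one (row, col, text)
# triple per changed row, covering only the span between the first and
# the last differing column. Both screens must have rows of equal width.
def reconcile(prev_screen, next_screen)
  next_screen.each_with_index do |line, row|
    old = prev_screen[row]
    next if old == line

    first = (0...line.length).find { |i| old[i] != line[i] }
    last = (line.length - 1).downto(first).find { |i| old[i] != line[i] }
    yield row, first, line[first..last]
  end
end
```

With this sketch, typing one more character yields a single one-character write, and a backspace yields a single space that overwrites the deleted character, so a keystroke never triggers a full repaint.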


So I changed the code to keep track of the cursor position and advance it as more characters were printed on the screen. This got more complicated once I added support for backspace. And then even more complicated with paging. When I got to implementing word wrapping, it was too much: there are so many different states to transition between!

Ideally, I don’t want to track the current screen position and code the drawing logic for each editing command. I want a model where I can define a piece of state (the text) and a function that defines what this should look like on the screen, without worrying about the underlying OLED API performance. I love this model and I’m sure there are tons of applications for it outside web development.

Here’s what my code looks like now:

    def render(text)
      # Return the text I want to be on the screen
    end


I bet I can hack this together with 1 line of bash. I thought it would be trivial: just read the keystrokes, pipe them to the OLED and a text file, and sync the text file over Dropbox. However, it turned out to be much more complicated than that :)

First, I couldn’t find a trivial way to read the text off the keyboard. I was looking for something like getc that can get data from a connected USB device, but no luck. Instead I found out that Linux has /dev/input/event* files where I could read raw events. That led me to the ancient input.h, which I used to hack together a simple parser.

Next, printing this stuff to the OLED also had gotchas. My initial version would just repaint the screen on every keystroke, which turned out to be very inefficient. A full display repaint using Omega’s OLED APIs takes as much as 400 ms, totally unacceptable for a good experience.
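The parser itself is not shown in the post, so the following is only a minimal sketch of reading raw events from a /dev/input/event* file. It assumes the classic `struct input_event` layout from input.h (a timeval made of two native longs, then a 16-bit type, a 16-bit code and a 32-bit value). `parse_event`, `each_key_press` and the tiny `KEYMAP` are hypothetical names for this illustration; the key codes are the standard Linux ones.

```ruby
# struct input_event: timeval (two native longs), __u16 type, __u16 code, __s32 value.
EVENT_FORMAT = "l!l!SSl"
EVENT_SIZE = [0, 0, 0, 0, 0].pack(EVENT_FORMAT).bytesize

EV_KEY = 0x01   # event type for key presses and releases
KEY_PRESS = 1   # value: 0 = release, 1 = press, 2 = autorepeat

# Deliberately incomplete map from Linux key codes to characters.
KEYMAP = { 16 => "q", 17 => "w", 18 => "e", 19 => "r", 20 => "t", 21 => "y", 57 => " " }

# Decode one raw event record into its type, code and value.
def parse_event(bytes)
  _sec, _usec, type, code, value = bytes.unpack(EVENT_FORMAT)
  { type: type, code: code, value: value }
end

# Read fixed-size records from io and yield the key code of every key press.
def each_key_press(io)
  while (bytes = io.read(EVENT_SIZE)) && bytes.bytesize == EVENT_SIZE
    event = parse_event(bytes)
    yield event[:code] if event[:type] == EV_KEY && event[:value] == KEY_PRESS
  end
end
```

It could be used along the lines of `File.open("/dev/input/event0", "rb") { |f| each_key_press(f) { |code| print KEYMAP.fetch(code, "?") } }`, where the device path is a guess and depends on which event file the keyboard shows up as. Shift, key release and autorepeat handling are left out.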

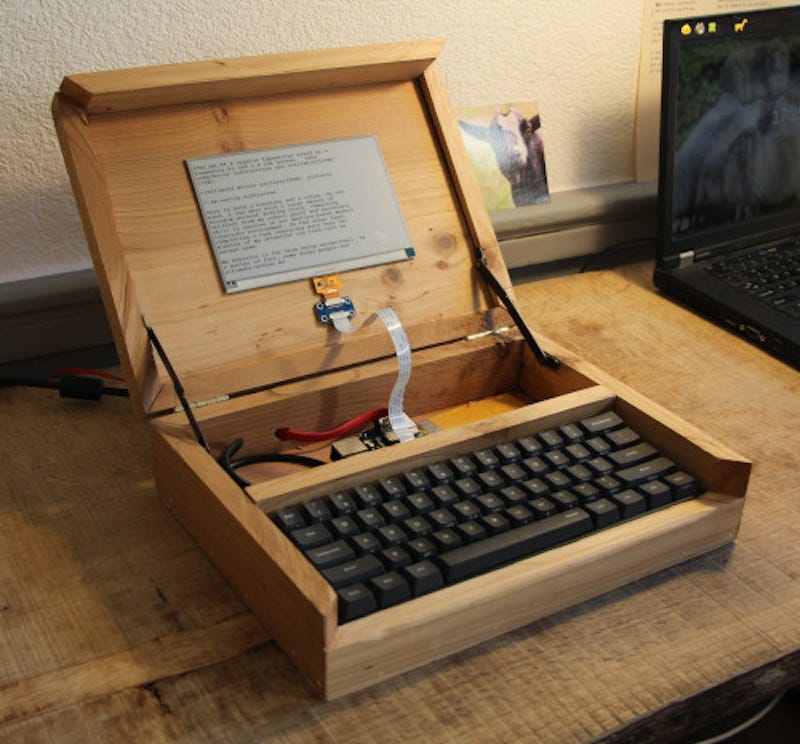

The idea was to connect my favorite keyboard (HHKB Pro2 Type-S) via USB to the Omega2. Anything I type there should immediately show up on the built-in OLED display and sync to “the cloud”.
